Kenshoo has a forecasting tool that has historically been designed and built by some of the smartest people at the company: the research group. They work on our state-of-the-art algorithms and spend their days talking about Bayesian statistics and modeling, covariates, regression analysis, and indices. They’re the type of people you want on your side, especially when you’re building out a successful marketing platform.

One of Kenshoo’s most powerful tools, Halogen, was the research team’s brainchild, allowing our clients to perform forecasting based on historical performance, seasonality, and external events. The clients who were using Halogen were seeing major results (20% time savings and a 53% increase in revenue with budget increases of 36%), but all of that success was being overshadowed by a poor user experience and the inability to read and interpret the answer to the simplest of questions: “If my Cost is $X, what can I expect in return?” Almost every interaction with the tool required someone from our research team to intervene and guide the clients on how to “correctly” use the tool.

Needless to say, this approach was unsustainable and costly. So together with the Product and research teams, client design partners, and internal teammates, the UX team set out to redesign the user experience to simply and beautifully illustrate the power of Halogen’s forecasting. Although I’d love to tell you about all the fascinating design work involved in that redesign, this is a blog post and not a novella. ;-)

After one round of testing, the research highlighted that the existing graph was incomprehensible. The design team had always felt strongly about that conclusion, but hey, what do we know? We’re not the best at maths. Now, however, we had the clients’ voice and qualitative data to back it up. This is the story of the challenge we faced, how we went about testing the new prototypes, and the design that ultimately won out.

The Goals

As with every research project, it’s best to start by outlining the goals and objectives of the research: what are our assumptions, what do we believe to be true, and above all, what defines success for the design?

We landed on three main goals for the graph, translated here into research goals in order of priority:

Comprehension is King. Can the person understand what the graph is telling them and use the graph to derive the correct answer without interaction?

Error avoidance. Which version of the graph helps clients avoid common “errors” or pitfalls, such as setting an unrealistic target?

Shareable. The graph will almost always be shared internally by our users to gain permission and sign-off before moving forward with the recommendation. Is the graph “shareable” and easily understood without much need for supplementary information?

There were other secondary goals for the research, such as finding where people look first, tracking their visual path in reading the graph, and understanding where they might click or hover to denote desired interaction patterns. But for the purposes of this micro-test, we focused on the primary goals above all others.

The Contenders

The O.G. (Original Graph)

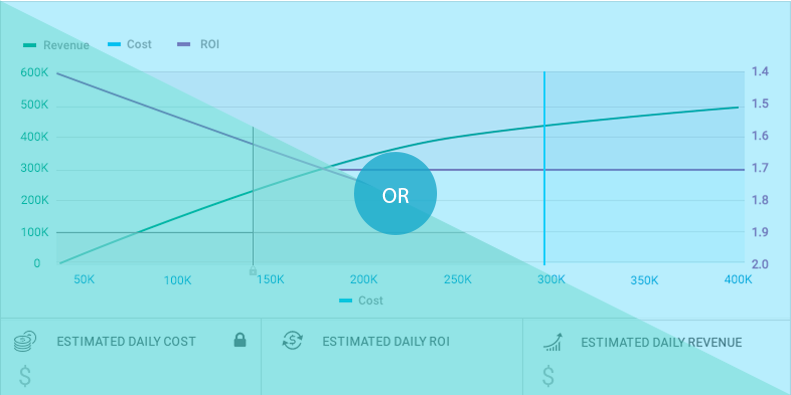

The O.G. (Original Graph) was a bit traditional in nature, opting for triple axes and double lines to show the inverse relationship of Cost to ROI, and was highly dependent on interaction to decipher its meaning.

The NGTOB

The new graph on the block (NGTOB) took a simpler approach, focusing on just one line. To do this and still show the inverse relationship of ROI to Cost, however, the ROI axis on the right was inverted (descending from bottom to top rather than ascending).

The Test

To test the goals, we decided on a cognitive walkthrough of each graph that included a series of tasks in which the participant needed to use the graph to fill in the missing information. Since the emphasis was on the graph itself rather than on interaction, we used static screenshots in a basic PowerPoint deck to walk the participants through the exercise. To counter order bias, we randomized the order in which the graphs appeared to the different participants.

Here are just a few of the questions that we outlined in the discussion guide:
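For anyone wanting to reproduce the ordering step, here is a minimal sketch of one way to do it. It is not the script we used; the participant IDs and graph labels are hypothetical. It shuffles the participants and then alternates the two possible orders, so the assignment is random but each graph is shown first about equally often (a counterbalanced variation on plain randomization).

```python
import random

GRAPHS = ["dual_line", "single_line"]  # hypothetical labels for the two concepts


def assign_orders(participants, graphs=GRAPHS, seed=None):
    """Return {participant: presentation order}, balanced across participants."""
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)  # randomize which participant gets which order
    orders = [list(graphs), list(reversed(graphs))]
    # Alternate the two possible orders so each is used about equally often.
    return {p: orders[i % 2] for i, p in enumerate(shuffled)}


# Hypothetical participant IDs; a fixed seed keeps the schedule repeatable.
schedule = assign_orders(["P1", "P2", "P3", "P4", "P5", "P6"], seed=7)
for participant in sorted(schedule):
    print(participant, "->", schedule[participant])
```

With an even number of participants, exactly half see each graph first; with an odd number, the split is off by one.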

Prescreening questions:

Do you currently use Halogen?

If so:

What do you think of the tool?

How are you using it today?

When was the last time that you issued a forecast/plan?

If not:

Do you know what Halogen is?

Have you used Halogen before?

How are you doing forecasting today?

Cognitive Walkthrough Questions:

What is this graph showing you?

What do the shaded areas represent?

What do you think the green line represents?

In one: what do you think the purple line represents?

What does the right axis represent?

What does the bottom axis represent?

What does the left axis represent?

What do you think the grey/colored areas are (depending on the graph being displayed)?

Tasks Walkthrough (done on each graph)

Dual Line Concept:

You have just gone through the new KPO portfolio setup flow, and you've configured a constraint of achieving a minimum ROI of 1.5 and another constraint of a maximum daily budget of $140K. Using the graph, tell me: what is the maximum Revenue you can achieve given those constraints?

Single Line Concept:

You have just gone through the new KPO portfolio setup flow, and you've configured a constraint of achieving a minimum ROI of 1.7 and another constraint of a maximum daily budget of $300K. Using the graph, tell me: what is the maximum Revenue you can achieve given those constraints?

On a scale of 1 (completely confusing) to 10 (super intuitive), how would you rate the overall intuitiveness of the graph?

At the end:

How might you change the graph to be clearer or more intuitive?

Do you think the graphs were showing you the same information? Why/Why not?

Of the two graphs, which do you think was clearer to you in its meaning? Which do you prefer?

The Findings

Plot twist! Neither graph “won.” Instead, it became clear that both graphs had their shortcomings. While the O.G. scored lower on “perceived” intuitiveness at 5.2 (eek!), the NGTOB scored only marginally better at 6.5. Neither score was good enough to go to production.

Sidenote on intuitiveness ratings and “scores”: the intuitiveness rating isn’t actually a quantitative metric (appearances are deceiving). It’s a qualitative metric aimed at providing a clear indication of preference, helping uncover additional ideas, and pinpointing biases. As with any qualitative data point, you have to take the feedback with a “grain of salt” and plenty of follow-up questions. The idea behind asking participants to give a rating is to:

Clearly identify the disparity between the tested concepts

Start a discussion on what would “make it a 10” (people can generally reflect and give good suggestions after they’ve given a rating)

Uncover any additional biases not in the rating but in their explanation of why they may have given that score

Clearly understand the individual and group preference

Identify any outliers in the study
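As a rough illustration of the first and last points, the sketch below averages the ratings per concept and flags scores that sit far from the group median. The numbers are made up and are not our study data, the `gap` threshold is an arbitrary assumption, and with a handful of participants the output is a prompt for follow-up questions rather than a statistical result.

```python
from statistics import mean, median

# Made-up ratings for illustration only; these are not the study's data.
ratings = {
    "concept_a": [4, 5, 6, 5, 9],
    "concept_b": [6, 7, 7, 6, 7],
}


def summarize(scores, gap=3):
    """Mean rating plus any scores at least `gap` points from the median."""
    mid = median(scores)
    outliers = [s for s in scores if abs(s - mid) >= gap]
    return {"mean": round(mean(scores), 1), "outliers": outliers}


summary = {name: summarize(scores) for name, scores in ratings.items()}
for name, stats in summary.items():
    print(name, stats)
```

A flagged score is a cue to go back to that participant's explanation, not a reason to discard the rating.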

The UX Research team compiled all the findings for each concept by participant using the rainbow spreadsheet methodology for compiling and highlighting usability testing feedback.

Rainbow spreadsheet outlining the observation, the concept/graph the observation applied to and the participants to which the observation pertained.
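If you have not seen one, the layout is easy to sketch: one row per observation, a column for the concept it applies to, and one column per participant marking who exhibited it. The snippet below is a hypothetical mock-up of that structure, not our actual spreadsheet. The observation text and participant IDs are placeholders, and the trailing count column is an added convenience for sorting.

```python
# Placeholder observations and participant IDs, for illustration only.
participants = ["P1", "P2", "P3", "P4", "P5"]
observations = [
    {"note": "Observation A", "concept": "dual line", "seen_by": {"P1", "P4"}},
    {"note": "Observation B", "concept": "single line", "seen_by": {"P1", "P2", "P3", "P5"}},
    {"note": "Observation C", "concept": "both", "seen_by": {"P2"}},
]


def to_rows(observations, participants):
    """Build one row per observation: note, concept, per-participant marks, count."""
    rows = []
    for obs in observations:
        marks = ["x" if p in obs["seen_by"] else "" for p in participants]
        rows.append([obs["note"], obs["concept"], *marks, len(obs["seen_by"])])
    # Put the most widely shared observations at the top.
    return sorted(rows, key=lambda row: row[-1], reverse=True)


rows = to_rows(observations, participants)
print(["Observation", "Concept", *participants, "Count"])
for row in rows:
    print(row)
```

In a real spreadsheet each participant column gets its own color, which is where the "rainbow" comes from.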

Back to the drawing board

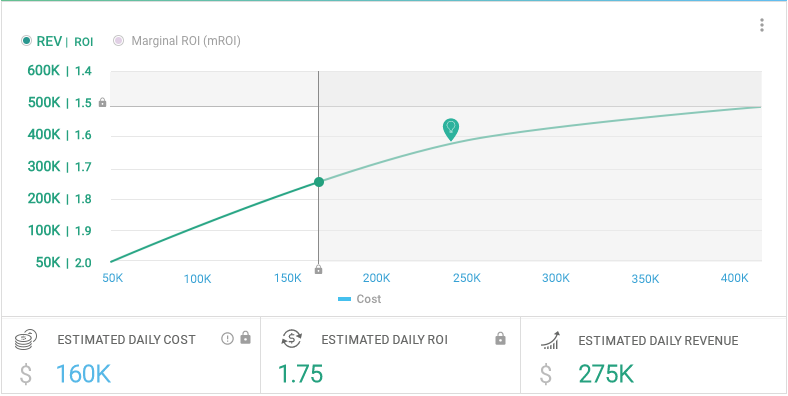

Addressing the pros and cons of each of the designs, the UX Research team reviewed the findings and quickly mocked up a third iteration based on the feedback from the participants. The “Goldilocks Graph” kept the simplicity of the single-line graph while addressing the confusion caused by three axes, minimizing the confusion of the descending axis, and highlighting the inverse relationship.

Best of all, participants validated the combined approach and rated the new concept significantly higher in terms of perceived intuitiveness with a score of 8.4.

The end…

At the end of the day, we tested, measured, and learned, embracing the best of lean and agile principles. The most important lesson we learned was never to hold your designs too dear. Design is by nature iterative and evolutionary. As designers, we must test our own designs so that we can improve not only our own practice but also our end users’ experience.